Again, consider the general constrained optimization problem

Goal: design a simple algorithm, based on first-order information to solve (1).

Recall from Chapter 4 that, for unconstrained problems () with differentiable objective, one simple algorithm is gradient descent (GD):

For constrained problems, a natural generalization is projected gradient descent (PGD), which combines GD with (Euclidean) projection onto .

Projection onto a set¶

Comments

computing is itself an optimization problem

returns the closest point(s) (in Euclidean norm) to that belongs to the set

when is closed and convex the projection is unique

if , then

in many important cases, can be computed explicitly. Example

Projected gradient algorithm¶

Typical step-size strategies

fixed-step size for all

backtracking linesearch (determine at each iteration based on sufficient decrease condition)

Example¶

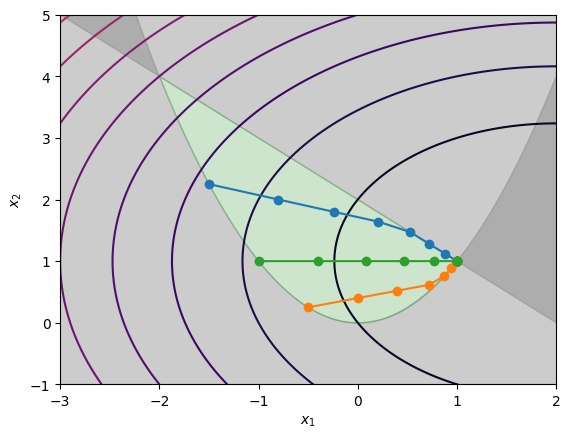

Consider the optimization problem

First, let us plot the objective function and feasible set.

Source

import numpy as np

import matplotlib.pyplot as plt

# Objective function

def f(x, y):

return (x - 2)**2 + (y - 1)**2

# Gradient

def dfdx(x, y):

return np.array([2*(x - 2), 2*(y - 1)])

# Constraint boundaries

def c1(x):

return x**2 # represents x^2 - y < 0

def c2(x):

return 2 - x # represents x + y < 2

# Ranges

xs = np.linspace(-5, 5, 400)

ys = np.linspace(-5, 5, 400)

X, Y = np.meshgrid(xs, ys)

# Prepare figure

plt.figure()

# Forbidden region from constraint 1: y < x^2

plt.fill_between(xs, c1(xs), ys.min(), color="gray", alpha=0.4,

label="Forbidden by constraint 1")

# Forbidden region from constraint 2: y > 2 - x

plt.fill_between(xs, c2(xs), ys.max(), color="gray", alpha=0.4,

label="Forbidden by constraint 2")

# Feasible region: x^2 < y < 2 - x

plt.fill_between(xs, c1(xs), c2(xs), where=c2(xs) > c1(xs),

color="green", alpha=0.2,

label="Feasible region")

# Contour of objective function

Z = f(X, Y)

plt.contour(X, Y, Z, cmap="inferno", levels=20) # thermal-like colormap

# Global minimizer

plt.scatter([1], [1], s=40, label="Global minimizer (1,1)")

# Labels and limits

plt.xlabel("$x_1$")

plt.ylabel("$x_2$")

plt.xlim(-3, 2)

plt.ylim(-1, 5)

plt.legend()

plt.show()

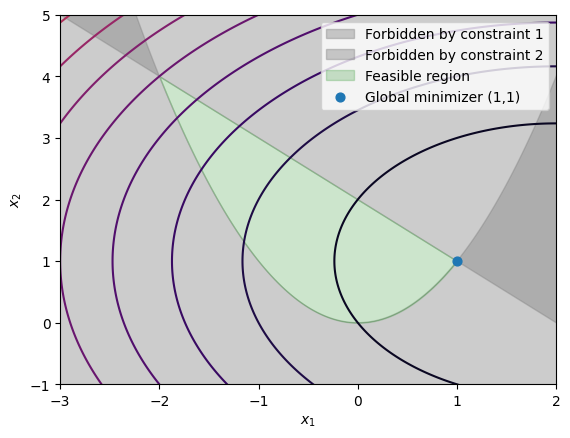

This can now visualize the trajectory of PGD for various starting points. In this example, we cannot compute easily the projection onto , so that we compute it using a numerical solver (CVXPy, see in a few sections). The implementation is very basic, uses fixed step-size, and stops after a finite a number of iterations. Of course, we can do better, but that’s just to demonstrate how PGD forces iterates to be feasible by projecting the usual GD step onto .

Source

import numpy as np

import cvxpy as cp

# Projection onto the constraints

def projConstraints(x):

z = cp.Variable(2)

objective = cp.Minimize(cp.sum_squares(x - z))

constraints = [

z[0]**2 - z[1] <= 0, # z1^2 - z2 <= 0

z[0] + z[1] - 2 <= 0 # z1 + z2 <= 2

]

problem = cp.Problem(objective, constraints)

problem.solve(solver=cp.SCS, verbose=False)

return z.value

# Projected Gradient Method

def ProjectedGradient(xinit, alpha, niter=10):

xn = np.zeros((2, niter + 1))

xn[:, 0] = xinit

for n in range(niter):

grad = dfdx(xn[0, n], xn[1, n]) # gradient at current iterate

x_next = xn[:, n] - alpha * grad

xn[:, n+1] = projConstraints(x_next)

return xn

zn = ProjectedGradient(np.array([-1.5, 1.5**2]), 0.1, niter=10)

zn2 = ProjectedGradient(np.array([-0.5, 0.5**2]), 0.1, niter=10)

zn3 = ProjectedGradient(np.array([-1.0, 1.0]), 0.1, niter=10)

# plotting

xs = np.linspace(-5, 5, 400)

ys = np.linspace(-5, 5, 400)

X, Y = np.meshgrid(xs, ys)

plt.figure()

# Forbidden regions

plt.fill_between(xs, c1(xs), ys.min(), color="gray", alpha=0.4)

plt.fill_between(xs, c2(xs), ys.max(), color="gray", alpha=0.4)

# Feasible region

plt.fill_between(xs, c1(xs), c2(xs), where=c2(xs) > c1(xs),

color="green", alpha=0.2)

# Objective contours

Z = f(X, Y)

plt.contour(X, Y, Z, cmap="inferno", levels=20)

# Trajectories

plt.plot(zn[0], zn[1], marker="o")

plt.plot(zn2[0], zn2[1], marker="o")

plt.plot(zn3[0], zn3[1], marker="o")

plt.xlim(-3, 2)

plt.ylim(-1, 5)

plt.xlabel("$x_1$")

plt.ylabel("$x_2$")