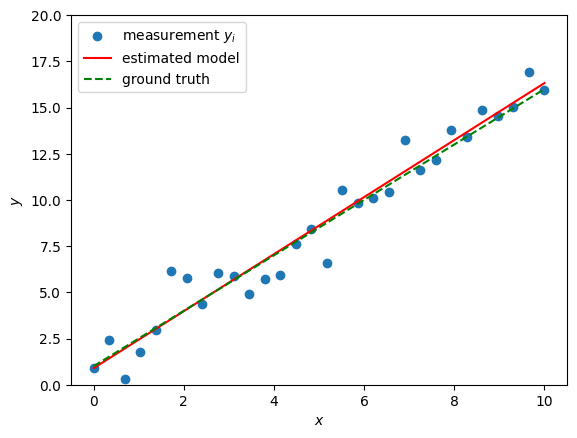

Let us assume we are observing some data as a function of a variable as depicted in the following plot:

Source

import numpy as np

import matplotlib.pyplot as plt

np.random.seed(2025)

beta0 = 1

beta1 = 1.5

n = 30

x = np.linspace(0, 10, n)

y = beta0 + beta1 * x + 1.2*np.random.randn(n)

plt.scatter(x, y, label="measurement $y_i$")

plt.xlabel(r"$x$")

plt.ylabel(r"$y$")

plt.ylim(0, 20)

plt.legend()

plt.show()

Formulating the optimization problem¶

Suppose we are interested in finding a straightforward mathematical relationship that links the input (or explanatory) variables to the observed data points . In other words, we want to model how the measurements depend on the values of . This process is known as regression analysis, and in this example, we will focus on linear regression, which assumes that the relationship between and can be well-approximated by a straight line, or in mathematical terms:

Let’s construct an objective function in the parameters . A first simple choice is to measure the error (or residual) between the model and the observations using a quadratic function

leading to the total model error

For a fixed data vector and known explanatory variables , we obtain the following unconstrained optimization problem in terms of :

This is a linear least squares problem. This type of problem is found in many applications, such as engineering, econometrics, image processing, etc.

Computing the solution¶

Linear regression is widely used across many fields, and there are numerous readily available tools for computing solutions. The goal of this chapter is to look under the hood of such tools. In the meantime, here’s how to solve the problem (4) using scikit-learn:

from sklearn.linear_model import LinearRegression

# Reshape x for scikit-learn

X = x.reshape(-1, 1)

model = LinearRegression()

model.fit(X, y)

# Extract coefficients

beta0_hat = model.intercept_

beta1_hat = model.coef_[0]

print(f"Estimated coefficients: beta0 = {beta0_hat:.2f}, beta1 = {beta1_hat:.2f}")

print(f"Ground truth coefficients: beta0 = {beta0:.2f}, beta1 = {beta1:.2f}")

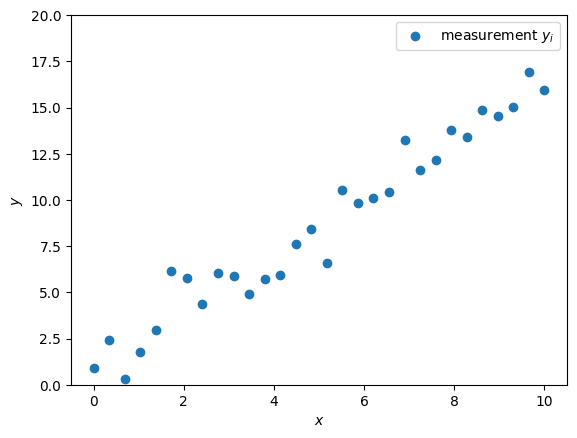

# Display the fitted line

plt.scatter(x, y, label="measurement $y_i$")

plt.plot(x, beta0_hat + beta1_hat * x, color="red", label="estimated model")

plt.plot(x, beta0 + beta1 * x, color="green", linestyle='--', label="ground truth")

plt.xlabel(r"$x$")

plt.ylabel(r"$y$")

plt.ylim(0, 20)

plt.legend()

plt.show()Estimated coefficients: beta0 = 0.89, beta1 = 1.54

Ground truth coefficients: beta0 = 1.00, beta1 = 1.50